Some healthcare AI use cases carry significant ethical implications, such as automated prior authorization.

AI implementation raises critical questions about patient rights, transparency, and the ability to opt out.

AI raises questions about liability both for using AI applications and, potentially, for choosing not to use them.

As a company focused on healthcare and human services, we recognize that AI implementation in these sectors carries unique ethical implications. Healthcare organizations must navigate complex ethical territory alongside regulatory requirements.

This piece explores the ethical considerations that shape responsible AI deployment in healthcare settings. These are not abstract concerns; they are practical realities that organizations must address in their AI initiatives.

When AI systems contribute to medical decisions, who is responsible when something goes wrong? Is it the healthcare providers using the system, the vendors distributing it, or the developers creating it?

This complex chain of responsibility mirrors emerging questions about autonomous vehicles. If a self-driving car makes an incorrect decision leading to an accident, responsibility might lie with the manufacturer, the software developer, the car owner, or some combination of these parties.

Healthcare AI faces similar but even higher-stakes questions: If an AI system recommends an incorrect treatment or misses a critical diagnosis, how do we determine whether the provider, the AI vendor, or the development team bears responsibility?

Healthcare organizations must balance the potential for system-wide improvements against the risk to individual patients and beneficiaries. An AI system might demonstrate excellent accuracy in aggregate while still producing outlier cases with life-altering consequences.

Rights-impacting or high-impact AI systems go beyond simple data processing by making consequential decisions that directly affect a patient’s access to care, treatment pathways, and insurance coverage.

For example, an AI algorithm might determine whether a patient qualifies for a specific treatment, receives insurance approval, or is prioritized on a medical waiting list. Such decisions carry profound implications for an individual’s health outcomes, financial well-being, and fundamental right to healthcare.

While AI systems may demonstrate high accuracy, we must carefully consider whether life-altering medical decisions should require human involvement to preserve empathy, nuanced understanding, and individualized care. The potential for these systems to introduce or perpetuate bias based on demographic factors, historical data patterns, or incomplete information raises significant ethical concerns about fairness and equal access to medical care.

The core principle of “do no harm” in healthcare requires careful consideration of how AI systems might affect vulnerable patients or unique cases that fall outside typical patterns.

Healthcare providers might soon face a new ethical question: Could they be held responsible for not using available AI tools that improve patient care? As AI demonstrates its ability to enhance diagnosis and treatment, organizations may need to justify decisions not to adopt these technologies.

This ethical question becomes particularly relevant as AI tools prove their ability to reduce medical errors, identify diseases earlier, or improve treatment outcomes. If an AI system consistently outperforms human providers in detecting early-stage cancers, for example, could choosing not to implement such technology be considered a failure to provide the best available standard of care?

Realistically, AI adoption is unlikely to pause until these ethical issues have been fully debated. The potential benefits and return on investment are simply too high.

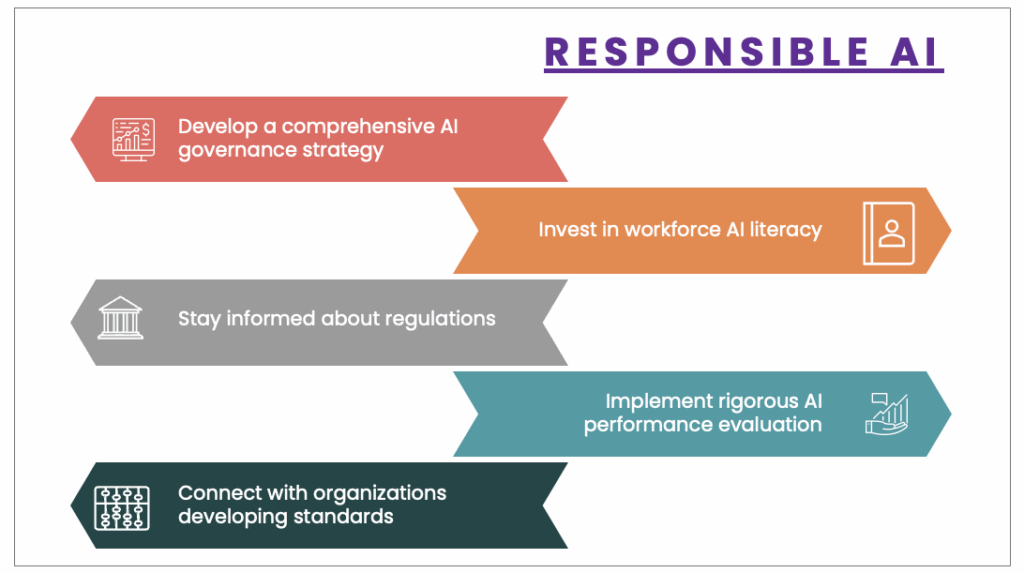

As technology continues to advance, healthcare organizations must take deliberate steps to ensure responsible AI implementation:

These strategic steps will enable healthcare organizations to navigate the complex ethical terrain of AI. Healthcare leaders can ensure technological advancements serve the best interests of patient care while maintaining the highest standards of medical ethics.
