Keywell's Healthcare AI Regulation Tracker

Clear regulatory frameworks can help pave the way for AI adoption in healthcare. Our regulation tracker provides an overview of the state and federal trends that inform our technology engagements at Keywell.

Legal and Regulatory Oversight of Healthcare AI

Healthcare organizations and agencies face critical challenges: a projected shortage of up to 86,000 doctors by 2036, family physicians spending 17 hours a week on administrative tasks, administrative backlogs due to reduced budgets, and significant claim-payment delays. Well-placed AI offers practical solutions to these pain points (and more).

Smart automation with AI has the potential to reduce administrative workload, streamline prior authorization, strengthen payment integrity, and uncover insights in unstructured medical data, freeing time to focus on outcomes and improving the experience of patients and beneficiaries.

Yet widespread AI adoption in healthcare requires clear regulatory frameworks. Legislation and regulation must protect providers, patients, and industry participants while enabling technological progress.

Healthcare organizations face multiple layers of AI oversight from federal and state regulators.

State laws increasingly require transparency and human review of AI-driven decisions.

Healthcare AI oversight develops through legal, regulatory, and industry channels.

Seven Key Areas of Healthcare AI Regulation

Most state and federal regulations for healthcare AI focus on seven primary areas of concern:

Use of AI for clinical decision-making.

Use of AI for prior authorization or medical necessity.

Use of AI for rights-impacting use cases (e.g., benefit determinations).

AI transparency for patients and beneficiaries.

Non-discrimination and fairness.

Data security and privacy (e.g., HIPAA).

AI as a medical device.

Healthcare AI Regulation: A Current Snapshot

Federal Healthcare AI Regulation

White House

President Biden's 2023 Executive Order directed HHS to develop guidelines for safe AI deployment in healthcare and public health sectors. However, in January 2025, President Trump revoked this order and issued new AI guidance focused on reducing regulatory requirements. The new executive order emphasizes technological innovation and directs a review of previous AI policies, including those addressing potential discrimination and bias in AI systems.

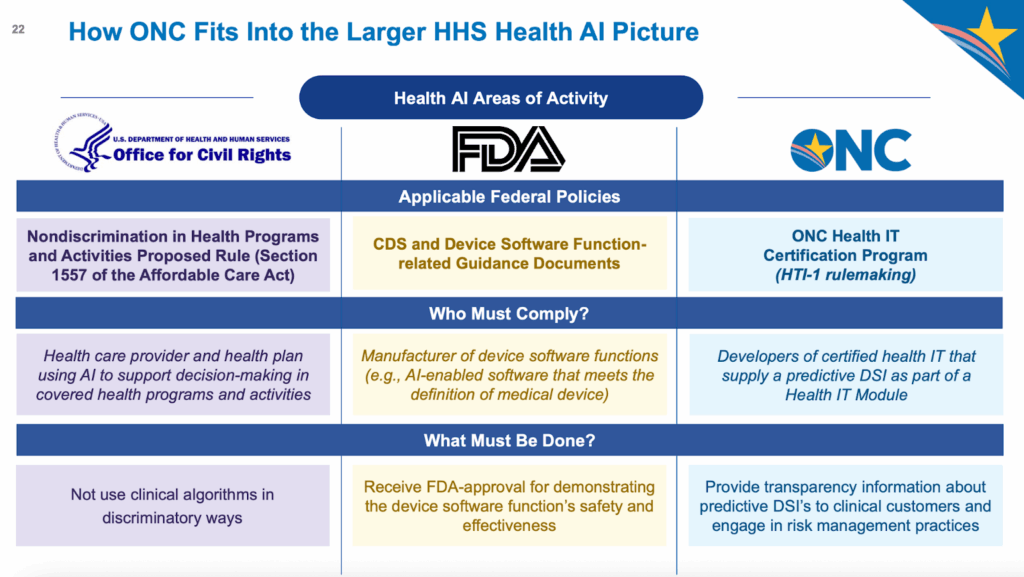

Office of the National Coordinator for Health Information Technology (ONC)

The ONC HTI‑1 Final Rule, effective February 2024, introduced robust AI transparency and governance obligations for certified health IT that supports Decision Support Interventions (DSIs), including predictive DSIs. Developers must make 13 source attributes available for evidence-based DSIs and 31 for predictive DSIs so that users can assess whether an algorithm is fair, appropriate, valid, effective, and safe (FAVES).

Office for Civil Rights (OCR)

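The HHS Office for Civil Rights issued a final rule in April 2024, under Section 1557 of the Affordable Care Act, expanding anti-discrimination protections. Covered healthcare organizations must now make reasonable efforts to identify and mitigate the risk of discrimination from patient care decision support tools, including AI systems, marking the first direct federal regulation of healthcare AI fairness.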

Office of Management and Budget (OMB)

On April 3, 2025, the Office of Management and Budget (OMB) released Memorandum M-25-21, "Accelerating Federal Use of AI through Innovation, Governance, and Public Trust." The memo reflects the current administration's pro-innovation message, which differs in tone from its predecessor's. However, its guidelines and policies largely maintain existing regulatory themes.

The memo introduces a "high-impact AI" classification for tracking AI use cases that require heightened due diligence, which specifically include systems affecting health, insurance, and government services.

The OMB also requires federal agencies to designate Chief AI Officers within 60 days and continues the requirement for agencies to inventory and document their AI use cases annually. These continuities suggest federal oversight of healthcare AI will remain robust despite the shift in regulatory emphasis.

National Institutes of Health (NIH)

In June 2025, NIH issued two requests for information (RFIs) to guide its evolving AI strategy. The first (NOT-OD-25-117) seeks public input on a strategic framework for advancing AI in biomedical research and public health, focusing on data readiness, trust, translation, and workforce development. The second (NOT-OD-25-118) addresses responsible use of generative AI with controlled-access human genomic data, aiming to identify risks, safeguards, and governance strategies. Both RFIs are open for comment through mid-July 2025.

Centers for Medicare and Medicaid Services (CMS)

The Centers for Medicare and Medicaid Services (CMS) has also taken definitive action on AI in coverage decisions. In a policy memo, CMS declared that Medicare Advantage plans cannot rely solely on AI to determine coverage or terminate services. A qualified healthcare professional must review these decisions.

State Healthcare AI Regulation

Many states have introduced legislation to regulate AI in healthcare, including laws that require coverage determinations to be reviewed by physicians or other qualified human reviewers rather than by AI alone. Some of these regulations also require transparency and assurances of non-discrimination.

Some bills require reporting to the state. For example, California requires reporting of "Critical Harm" incidents, while Colorado requires companies using high-risk AI systems to document and disclose detailed information about the system's purpose, risks, and impacts on decisions.

Many of these state bills defer action by creating AI task forces or innovation councils instead of establishing immediate regulations. This reflects the tension between enabling AI innovation and ensuring proper oversight.

Our state AI regulation tracker compiles updated state legislation and regulatory activity that affects healthcare and human services, categorized by common themes such as transparency, limits on the use of AI for prior authorization, and nondiscrimination.

Note: The following table and all related content were generated using web search and AI data extraction. Please verify the information before use.

FDA Regulation of Medical Devices

The FDA approaches AI-enabled medical devices through a risk-based framework. Its regulatory process examines an algorithm's intended use, development process, and clinical validation.

Software as a Medical Device (SaMD) faces particular scrutiny, especially when AI systems adapt or learn from new data. The FDA requires manufacturers to submit plans for monitoring real-world performance and managing algorithm updates. The FDA's January 2025 draft guidance (currently under public comment) provides specific recommendations for AI lifecycle management and marketing submissions, emphasizing performance monitoring, transparency, and clear documentation of model development and validation.

Three Paths to Healthcare AI Policy and Oversight

The advancement of healthcare AI creates unique regulatory challenges, as new use cases and potential risks emerge daily. Oversight is developing through three interconnected channels:

Legal Precedent

Court decisions and settlements are setting standards for AI accountability and transparency. In 2024, the Texas Attorney General's settlement over overstated AI accuracy claims demonstrated heightened scrutiny of healthcare AI companies' marketing practices.

Federal and State Regulation

While agencies like the FDA oversee clinical decision support systems, regulatory frameworks must evolve to address emerging technologies and use cases.

See our breakdown.

Industry Standards

Organizations such as the Coalition for Health AI (CHAI), the Trustworthy and Responsible AI Network (TRAIN), and the Health AI Partnership (HAIP) are developing voluntary standards and "AI nutrition labels" that provide transparency about model capabilities and limitations. The HAIP/CHAI model has been adopted by the Joint Commission, and accreditation processes are beginning in 2025.

Implementing Healthcare AI with Confidence

Healthcare AI holds tremendous potential to address industry challenges, from provider shortages to administrative burdens. However, organizations must balance innovation with compliance as regulations develop at state and federal levels.

Early awareness of these requirements during the AI implementation process helps ensure sustainable, compliant solutions.

Keywell's healthcare AI specialists stay current with regulatory developments to guide organizations through implementation decisions. Contact us to discuss your AI strategy and learn how we help healthcare organizations navigate these requirements.

Navigating the Ethical Landscape of AI in Healthcare

Beyond regulatory compliance, healthcare organizations must also consider crucial ethical implications in AI deployment. See our related article on ethical considerations in healthcare AI for a deeper exploration of these issues.

This article is intended for informational purposes only and does not constitute legal advice. Organizations should consult qualified legal counsel to address their specific regulatory obligations regarding AI in healthcare.

How can we support your AI strategy? Contact us at info@keywell.ai.